Hadoop Assignment Help

Hadoop Assignment Help Expert: An Overview Of Hadoop

Hadoop is an open-source Apache framework written in the Java programming language. It allows distributed processing of large datasets across clusters of computers using simple programming models. The Hadoop environment provides distributed storage and computation across clusters of computers, and it is designed to scale up from a single server to thousands of machines.

In 2006, Doug Cutting joined Yahoo and took the Nutch project with him. The project was then divided, and Hadoop became its distributed storage and processing portion. In 2008, Hadoop became a top-level project of the Apache Software Foundation (ASF). The ASF is a global community of software developers, and it now maintains and manages the framework and its ecosystem of technologies.

The prerequisite for this page is a sound knowledge of the Hadoop ecosystem.


Why is Hadoop Important?

- Scalability: You can grow the system to handle more data simply by adding more nodes, and little administration is required.

- Low Cost: The framework is open source and uses commodity hardware to store large volumes of data.

- Fault Tolerance: Data and applications are protected against hardware failure. If a node goes down, jobs are automatically redirected to other nodes, and multiple copies of the data are stored so that a single node failure does not cause data loss.

- Computing Power: Hadoop's distributed computing model processes big data quickly. Processing power grows with the number of computing nodes: the more nodes you use, the more processing power you have.

- Ability to process huge amounts of any kind of data, quickly: Data volumes keep increasing, especially on social media, so this is a key consideration.

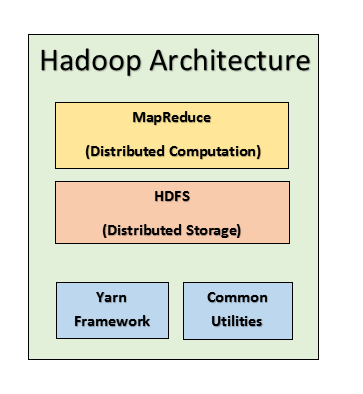

Hadoop Architecture

The framework includes four modules:

Hadoop Common: These are Java libraries that provide filesystem and OS-level abstractions. They also contain the Java files and scripts required to start Hadoop.

Hadoop YARN: A framework for job scheduling and cluster resource management.

Hadoop Distributed File System (HDFS): A distributed file system that provides high-throughput access to application data.

Hadoop MapReduce: A YARN-based system for parallel processing of large data sets.

Since 2012, the term “Hadoop” has often referred not just to the base modules mentioned above but also to the collection of additional software packages that can be installed on top of or alongside Hadoop, such as Apache Pig, Apache Hive, Apache HBase, and Apache Spark.

MapReduce

Hadoop MapReduce is a software framework for easily writing applications that process large amounts of data in parallel. It runs on large clusters of commodity hardware in a reliable, fault-tolerant manner. The term MapReduce refers to two different tasks that Hadoop programs perform:

- Map task: This task takes input data and converts it into a set of data in which individual elements are broken down into key/value pairs.

- Reduce task: This task takes the output of a map task as its input and combines those key/value pairs into a smaller set of tuples.
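The classic illustration of these two tasks is word count. The sketch below runs both phases in a single Python process. A plain dictionary stands in for the grouping ("shuffle") step that Hadoop performs between map and reduce. It only shows the data flow: the function names are hypothetical, and no Hadoop API is used.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_task(line: str) -> Iterator[Tuple[str, int]]:
    """Map: break one line of input into (word, 1) key/value pairs."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Group every value under its key, as the framework does between the two tasks."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_task(key: str, values: List[int]) -> Tuple[str, int]:
    """Reduce: combine all values for one key into a single, smaller tuple."""
    return key, sum(values)


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    mapped = (pair for line in lines for pair in map_task(line))
    grouped = shuffle(mapped)
    return dict(reduce_task(key, values) for key, values in sorted(grouped.items()))


print(word_count(["Deer Bear River", "Car Car River", "Deer Car Bear"]))
# {'bear': 2, 'car': 3, 'deer': 2, 'river': 2}
```

Each map call looks at only one line, and each reduce call looks at only one key. Because of this, a real cluster can spread both phases across many machines.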

Typically, both the input and the output are stored in the file system, and the framework takes care of scheduling tasks, monitoring them, and re-executing failed tasks. The framework consists of a single master JobTracker and one slave TaskTracker per cluster node. The master is responsible for scheduling tasks on the slaves. The slaves execute the tasks assigned by the master and report task status to it periodically. The JobTracker is a single point of failure for Hadoop MapReduce: if it goes down, all running jobs are halted. This is the classic MapReduce architecture of Hadoop 1. From Hadoop 2 onward, YARN's ResourceManager and per-node NodeManagers take over these roles.
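To make the master's role more concrete, here is a toy, single-process sketch of the scheduling loop. It hands tasks to nodes in round-robin order. When a node fails, the master stops using that node and re-queues the task for another one. This is not the JobTracker's actual algorithm, which also relies on heartbeats, data locality and retry limits. The node names, the `schedule` function and the failure model (a node raising `RuntimeError`) are all hypothetical.

```python
from collections import deque
from typing import Callable, Dict, List, Tuple

# Hypothetical model: a "node" is a function that runs a task and returns its output,
# or raises RuntimeError to simulate a machine failure.
Node = Callable[[str], str]


def schedule(tasks: List[str], nodes: Dict[str, Node]) -> Tuple[Dict[str, str], List[str]]:
    """Toy master: hand tasks to live nodes round-robin and re-run any task whose node fails."""
    live = list(nodes)          # nodes the master still trusts
    queue = deque(tasks)        # tasks waiting to be (re-)executed
    results: Dict[str, str] = {}
    turn = 0
    while queue:
        if not live:
            raise RuntimeError("no live nodes left to run: " + ", ".join(queue))
        task = queue.popleft()
        node = live[turn % len(live)]
        turn += 1
        try:
            results[task] = nodes[node](task)
        except RuntimeError:
            live.remove(node)   # stop scheduling on the failed node
            queue.append(task)  # re-execute the task on another node later
    return results, live


def healthy(task: str) -> str:
    return task.upper()


def crashed(task: str) -> str:
    raise RuntimeError("node down")


results, live = schedule(["t1", "t2", "t3"], {"node1": healthy, "node2": crashed})
print(results, live)
# {'t1': 'T1', 't3': 'T3', 't2': 'T2'} ['node1']
```

Task `t2` first lands on the crashed node, is re-queued, and finishes on `node1`. This mirrors, in miniature, how the framework re-executes failed tasks elsewhere.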

Hadoop Distributed File System(HDFS)

HDFS is the most common file system used by Hadoop, and it is based on the Google File System (GFS). It uses a master-slave architecture. The master is a single NameNode that manages the file system metadata, and the slaves are DataNodes that store the actual data. A file in an HDFS namespace is split into several blocks, and those blocks are stored on a set of DataNodes. The NameNode determines the mapping of blocks to DataNodes. The DataNodes handle read and write operations with the file system. They also take care of block creation, deletion, and replication based on instructions from the NameNode. HDFS provides a shell-like interface with a set of commands for interacting with the file system.
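The block-and-replica bookkeeping described above can be sketched in a few lines. This is a simplified model with hypothetical function names. Real HDFS defaults to 128 MB blocks (`dfs.blocksize`) and a replication factor of 3 (`dfs.replication`), and it places replicas with a rack-aware policy. The round-robin placement below is only a stand-in for that policy.

```python
from typing import Dict, List


def split_into_blocks(data: bytes, block_size: int) -> List[bytes]:
    """Cut a file into fixed-size blocks; only the last block may be shorter."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def place_replicas(num_blocks: int, datanodes: List[str], replication: int) -> Dict[int, List[str]]:
    """Toy NameNode metadata: map each block id to `replication` distinct DataNodes."""
    if replication > len(datanodes):
        raise ValueError("replication factor exceeds the number of DataNodes")
    block_map: Dict[int, List[str]] = {}
    for block_id in range(num_blocks):
        start = block_id % len(datanodes)  # rotate the first replica to spread load
        block_map[block_id] = [datanodes[(start + r) % len(datanodes)] for r in range(replication)]
    return block_map


blocks = split_into_blocks(b"0123456789", block_size=4)
print([len(b) for b in blocks])  # [4, 4, 2]
print(place_replicas(len(blocks), ["dn1", "dn2", "dn3", "dn4"], replication=3))
# {0: ['dn1', 'dn2', 'dn3'], 1: ['dn2', 'dn3', 'dn4'], 2: ['dn3', 'dn4', 'dn1']}
```

Because every block lives on three different DataNodes, losing any one node still leaves two copies of each block. On a real cluster, you can inspect a file's actual blocks and their locations with `hdfs fsck <path> -files -blocks -locations`.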

Working of Hadoop.

- Stage 1: A user or application submits a job to Hadoop through the Hadoop job client, with specifications such as the locations of the input and output files in the distributed file system and the Java classes, packaged as a JAR file, that contain the implementation of the map and reduce functions.

- Stage 2: The Hadoop job client submits the job to the JobTracker. The JobTracker then takes on these responsibilities: distributing the software to the slaves, scheduling and monitoring tasks, and providing status and diagnostic information to the job client.

- Stage 3: The TaskTrackers execute the tasks according to the MapReduce implementation, and the output of the reduce function is stored in output files on the file system.
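The three stages above can be mimicked locally to show what each part of the job specification is for. In this sketch, `JobSpec` and `run_job` are hypothetical names. The whole job runs in one Python process rather than on a JobTracker and TaskTrackers. Only two details imitate real Hadoop behavior: the output file name `part-r-00000`, and the refusal to write into an output directory that already exists.

```python
import os
import tempfile
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Dict, Iterator, List, Tuple

Pair = Tuple[str, int]


@dataclass
class JobSpec:
    """Stage 1: what the client submits (input/output locations plus map and reduce code)."""
    input_dir: str
    output_dir: str
    mapper: Callable[[str], Iterator[Pair]]
    reducer: Callable[[str, List[int]], Pair]


def run_job(job: JobSpec) -> str:
    """Stages 2-3 collapsed into one local process: map every input line, group, reduce, write."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for name in sorted(os.listdir(job.input_dir)):
        with open(os.path.join(job.input_dir, name), encoding="utf-8") as f:
            for line in f:
                for key, value in job.mapper(line):
                    groups[key].append(value)
    # Like Hadoop, refuse to overwrite an existing output directory (raises FileExistsError).
    os.makedirs(job.output_dir, exist_ok=False)
    out_path = os.path.join(job.output_dir, "part-r-00000")
    with open(out_path, "w", encoding="utf-8") as out:
        for key in sorted(groups):
            k, v = job.reducer(key, groups[key])
            out.write(f"{k}\t{v}\n")
    return out_path


def words(line: str) -> Iterator[Pair]:
    for w in line.split():
        yield w, 1


def total(key: str, values: List[int]) -> Pair:
    return key, sum(values)


with tempfile.TemporaryDirectory() as tmp:
    in_dir = os.path.join(tmp, "input")
    os.makedirs(in_dir)
    with open(os.path.join(in_dir, "a.txt"), "w", encoding="utf-8") as f:
        f.write("to be or not to be\n")
    path = run_job(JobSpec(in_dir, os.path.join(tmp, "output"), words, total))
    with open(path, encoding="utf-8") as f:
        print(f.read(), end="")  # tab-separated lines: be 2, not 1, or 1, to 2
```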

Advantages of Hadoop.

- The framework allows users to quickly write and test distributed systems. It is efficient: it automatically distributes data and work across the nodes and makes use of the underlying parallelism of the CPU cores.

- It does not rely on hardware to provide fault tolerance and high availability. Instead, Hadoop itself is designed to detect failures and handle them.

- Servers can be added or removed from the clusters dynamically and hadoop continues to work without interruption.

- A big advantage is that, apart from being open source, it is compatible with all types of platforms, since it is Java based.

4 Frameworks Which Our Hadoop Assignment Help Experts Use Frequently

PIG - Apache Pig is a platform for data manipulation whose programming language is called Pig Latin. With it, you can process or extract the required data easily and effectively. Pig is a data flow system built on Hadoop. It is excellent for ad hoc data analysis and is easily extended using user-defined functions.

HIVE - Hive is a data warehouse solution that processes data stored in HDFS using HQL (Hive Query Language). HQL is very similar to SQL, so anyone who knows SQL can easily query and present data processed in Hive.

IMPALA - Impala is a SQL query engine developed by Cloudera. It is a memory- and CPU-intensive system that queries data stored in the Hadoop Distributed File System (HDFS). It is one of the fastest and most powerful processing tools. It relies on metadata about tables and maps data to processors with minimal data movement for the highest possible performance.

SPARK - Spark is a big data processing engine originally developed at UC Berkeley's AMPLab and now an Apache Software Foundation project. Spark was designed to speed up Hadoop-style processing, by orders of magnitude for some workloads, largely by keeping data in memory. It is not a Hadoop replacement, but it is compatible with the Hadoop Distributed File System (HDFS). Spark has APIs in Java, Python, Scala, and R.

These are only some of the frameworks developed to ease the storage and processing of large-scale raw data. Such tools are needed because storing and processing data becomes extremely difficult once its size exceeds 20TB. As the size grows, managing resources, connecting to databases, storing, and processing all become harder. Organizing big data requires a high degree of effort, processing power, and specialized tools such as Hadoop. During a Hadoop course, students are also expected to master these recommended frameworks and technologies.

Place Your Order Now and Get Hadoop Assignment Help at Discounted Price

If you want to excel in this subject or in your course of study, seek help from AssignmentHelp.Net without a second thought. Our professionals know that getting good grades in a few weeks is not easy. They are experienced, however, and can handle even the hard tasks easily and effectively. By ordering Hadoop assignment help from us, you also get additional benefits such as:

Plagiarism-free content: Our content is plagiarism-free because it is written by experienced professionals. They have worked in this field for a long time and contribute their own ideas and knowledge, so that we can provide quality content to our students.

Topics Covered in MapReduce Hadoop Assignment Help Services

MapReduce takes over the pain of sifting through data and doing complicated mathematical calculations. Students may not appreciate this at first, but our MapReduce Hadoop assignment help services teach them all about this powerful tool. For example, we explain what map and reduce algorithms are and what is meant by map keys and map values. If the complications of crafting assignments with all that information and detail become too much, just call us and we will take matters into our own hands. Here are some of the topics we cover:

- Basics and Introduction to MapReduce Hadoop Framework

- Data-Parallel Processing with MapReduce

- Google MapReduce and Data Flow

- Architecture of MapReduce

- Shuffling and Sorting in MapReduce

- Basic MapReduce API

- Application of MapReduce and Cluster Configuration

- Common Data Problems in MapReduce

- Important Components in MapReduce

- Modes of Operation

- Usage of Distributed Cache

- Types of Data Formats in MapReduce

Best Help for Attaining Brilliant Results in MapReduce Hadoop Examinations

We cannot guarantee a 100% score in an online MapReduce Hadoop examination. We do promise that our MapReduce Hadoop homework help services will give you a foundation and knowledge of the subject on par with the best students in your class. Just air your concerns to our customer care representatives, and we will assemble a team of highly talented online MapReduce Hadoop assignment help experts from across the world.

MapReduce programming is one of the most important techniques in Hadoop programming, and it lets you work with large amounts of data by processing them in parallel across multiple nodes. Hadoop supports MapReduce programming and runs on a master-slave architecture. MapReduce remains the most popular technique for processing large amounts of data in Hadoop.

MapReduce Hadoop Assignment Help By Big Data Experts at AssignmentHelp

Introduction to MapReduce:

MapReduce is a programming model for processing and generating large data sets with parallel processing. It was described by Google in 2004. Hadoop MapReduce is the Apache Software Foundation's open-source Java implementation of it. A single MapReduce job can process and analyze a large amount of data, and MapReduce is considered one of the most powerful tools for big data processing. It provides two functions, Map and Reduce, which are used to process, filter, and group data. The map function is applied to input data in various formats, such as blocks, files, and databases. It processes each input record and emits key-value pairs. The reduce function works on the output generated by the map function and combines sets of key-value pairs into a smaller set of key-value pairs.
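The map and reduce roles just described, including filtering, can be seen in a small example that finds the maximum temperature per year from comma-separated records. The record format and the `9999` missing-value marker are assumptions made up for this sketch. The function names are hypothetical, and everything runs in one Python process rather than through the Hadoop Java API.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple

MISSING = 9999  # hypothetical marker for a missing reading


def map_fn(record: str) -> Iterator[Tuple[str, int]]:
    """Map: parse 'year,temperature', drop missing readings, emit (year, temperature)."""
    year, temp = record.strip().split(",")
    if int(temp) != MISSING:
        yield year, int(temp)


def reduce_fn(year: str, temps: List[int]) -> Tuple[str, int]:
    """Reduce: combine all readings for one year into a single (year, max) pair."""
    return year, max(temps)


def max_temperature(records: Iterable[str]) -> Dict[str, int]:
    groups: Dict[str, List[int]] = defaultdict(list)
    for record in records:
        for year, temp in map_fn(record):
            groups[year].append(temp)
    return dict(reduce_fn(year, temps) for year, temps in sorted(groups.items()))


print(max_temperature(["1949,111", "1949,78", "1950,0", "1950,22", "1950,9999", "1950,-11"]))
# {'1949': 111, '1950': 22}
```

The map function handles filtering: a missing reading simply emits nothing. The reduce function handles aggregation, collapsing many pairs per key into one.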

MapReduce is designed around feature extraction and data transformation. Any large dataset whose information can be expressed as key-value pairs can be processed by a map function. The reducer takes the output of the map function and turns it into the required result. For a given key, the reducer can emit zero, one, or several key-value pairs, and different keys produce different key-value pairs. MapReduce works by distributing a data set across a cluster of many nodes and processing the parts at the same time. MapReduce programs are usually written in Java. Through Hadoop Streaming, they can also be written in languages such as Ruby or Python, or in any other language that can read from standard input and write to standard output.
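As a sketch of the Hadoop Streaming approach just mentioned, the script below contains a word-count mapper and reducer that speak the usual streaming text protocol: one `key<TAB>value` pair per line. The reducer depends on the framework sorting the mapper output by key, so that equal keys arrive next to each other. The script name and the `map`/`reduce` command-line arguments are hypothetical. On a cluster, the two commands would be passed to the streaming jar's `-mapper` and `-reducer` options. Run without those arguments, the script instead simulates `mapper | sort | reducer` locally.

```python
import sys
from itertools import groupby
from typing import Iterable, Iterator


def mapper(lines: Iterable[str]) -> Iterator[str]:
    """Streaming mapper: read raw text lines, emit 'word<TAB>1' lines."""
    for line in lines:
        for word in line.split():
            yield f"{word}\t1"


def reducer(lines: Iterable[str]) -> Iterator[str]:
    """Streaming reducer: input is sorted by key, so equal keys arrive next to each other."""
    pairs = (line.rstrip("\n").split("\t", 1) for line in lines)
    for word, group in groupby(pairs, key=lambda kv: kv[0]):
        yield f"{word}\t{sum(int(count) for _, count in group)}"


if __name__ == "__main__" and sys.argv[1:] in (["map"], ["reduce"]):
    # e.g. `python wordcount.py map` as the mapper, `python wordcount.py reduce` as the reducer
    stage = mapper if sys.argv[1] == "map" else reducer
    for out_line in stage(sys.stdin):
        print(out_line)
else:
    # Local stand-in for the pipeline: mapper | sort | reducer
    for out_line in reducer(sorted(mapper(["to be or not", "to be"]))):
        print(out_line)  # be 2, not 1, or 1, to 2 (tab-separated)
```

Keeping the mapper and reducer as generators over lines means the same functions work on `sys.stdin` in production and on a plain list in a quick local test.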

Features of Our MapReduce and Hadoop Assignment Help:

Our MapReduce and Hadoop assignment help services help students complete their assignments in the best possible way, so you can achieve significant results in MapReduce. We focus on three things: precision, quality, and on-time delivery. We are responsible and accountable for our commitments to our students. We assure you that our assignment help will be authentic, original, and of the highest quality. Our writers must follow certain terms and rules before they start writing any assignment, and they may not even copy the styles of others. These services are affordable for both staff and students. We have designed a search engine to find the best writers and a booking system to deliver fast and reliable MapReduce assignments. We maintain a strong anti-plagiarism policy and work in the best interests of our students. We also provide 24/7 support, so that students can make inquiries about their assignments anytime, anywhere.

To get help with a Big Data Hadoop project, contact us.

